Why I'm Pairing Generative AI with Vector Databases

Generative AI is powerful, but it has a major blind spot: it doesn't know your business data. In this post, I share why I've been building systems that pair LLMs with vector databases to solve this exact problem. This combination acts as a "long-term memory" for AI, sharply reducing hallucinations and turning the technology from a creative toy into a grounded, reliable business tool.

Jonathan Nieves

11/30/2025 · 2 min read

I’ve been building AI applications for a while now, and the initial thrill of GPT-4 faded once I hit the "LLM wall." While these models are brilliant generalists, they know nothing about my company’s internal data. When asked about specific, private documents, they often hallucinate, producing plausible but wrong answers.

That’s when I shifted my focus. I’ve been building systems that pair generative AI with vector databases, and this pairing turned out to be the missing link that makes AI truly useful for business.

The Problem: LLMs Have Amnesia

You can’t just paste a 5,000-page manual into ChatGPT: a document that size exceeds the context window of most models. Standard databases are great for exact keyword matches, but they miss text that expresses the same idea in different words. You need a way to connect the AI’s reasoning abilities with your specific, proprietary knowledge without retraining the whole model.

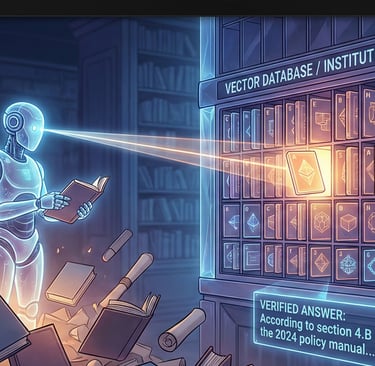

The Solution: Vector Databases as "Long-Term Memory"

A vector database stores text as numbers (vectors) that represent meaning. An embedding model produces those vectors, and the database indexes them for similarity search. This allows the system to find data that is conceptually similar, not just keyword-identical.
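To make "conceptually similar" concrete, here is a minimal sketch of the comparison a vector database performs. It assumes cosine similarity as the distance measure (a common choice, though not the only one), and the three-dimensional vectors are hand-made values for illustration, not output from a real embedding model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings". A real embedding model would produce
# hundreds or thousands of dimensions per text.
TOY_EMBEDDINGS = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "I forgot my login credentials": [0.8, 0.2, 0.1],
    "What is the refund policy?": [0.1, 0.9, 0.2],
}

def most_similar(query_vector, embeddings):
    """Return the stored text whose vector points closest to the query."""
    return max(
        embeddings,
        key=lambda text: cosine_similarity(query_vector, embeddings[text]),
    )

# Pretend this vector encodes the question "I can't sign in".
query_vector = [0.7, 0.3, 0.1]
best_match = most_similar(query_vector, TOY_EMBEDDINGS)
print(best_match)
```

The query shares no keywords with the stored texts; the match comes purely from the vectors pointing in a similar direction. A production vector database does the same comparison, but with an index so it does not have to scan every stored vector.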

Here is the workflow (often called RAG, short for retrieval-augmented generation) that I use:

The Search: A user asks a question. The system converts the question into a vector and queries the vector database to find the most relevant paragraphs from your internal files.

The Retrieval: It pulls those specific text snippets instantly.

The Answer: It feeds those snippets to the generative AI and says, "Answer the user's question using only this information."

The result? Fluent answers grounded in facts, with far fewer hallucinations.
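The three steps above can be sketched in a few lines. This is a rough illustration, not a production pipeline: `embed` is a hypothetical stand-in that counts words instead of calling a real embedding model, the stored chunks are made-up sample text, and the final call to the LLM is left out, so the sketch stops once the prompt is built.

```python
import math
from collections import Counter

def embed(text):
    """Stand-in for a real embedding model: a bag-of-words count vector.
    Hypothetical placeholder; it matches shared words, not meaning."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(question, chunks, top_k=2):
    """Search and retrieval: score every chunk against the question, keep the best."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, snippets):
    """The answer step: wrap the retrieved snippets in an instruction for the LLM."""
    context = "\n".join(f"- {s}" for s in snippets)
    return (
        "Answer the user's question using only this information.\n"
        f"Information:\n{context}\n"
        f"Question: {question}"
    )

# Made-up sample chunks, for illustration only.
CHUNKS = [
    "Employees accrue 1.5 vacation days per month.",
    "Expense reports are due by the fifth of each month.",
    "The office is closed on public holidays.",
]

question = "How many vacation days do employees accrue?"
snippets = retrieve(question, CHUNKS, top_k=1)
prompt = build_prompt(question, snippets)
print(prompt)
```

In a real system, `embed` would call an embedding model and `retrieve` would be a query against the vector database; the prompt-building step stays essentially the same.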

Real-World Utility

This combination has made the apps I'm building far more useful. Here is how:

Chatting with Data: I’ve been developing internal tools that let employees query massive repositories of PDFs and contracts. Instead of "Ctrl+F," they ask natural questions and get synthesized answers citing the exact source document.

Hyper-Personalization: Unlike standard recommendation engines, vector embeddings capture the "vibe" of a user’s taste. If a user likes "minimalist" products, the AI can find semantically similar items across different categories and explain why they fit the user's style.

Smarter Support: We are feeding vector databases thousands of past support tickets. When a new issue arrives, the system finds similar past problems and drafts a solution based on what worked before, giving agents instant institutional memory.
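The source citations mentioned under "Chatting with Data" depend on keeping track of where each snippet came from. Here is a minimal sketch of one way to do that, assuming fixed-size word windows as the chunking strategy. The `Chunk` class, both helper functions, the file name, and the sample sentence are all illustrative and not taken from any particular library or from my own tools.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    text: str
    source: str  # file the text came from
    index: int   # position of this chunk within that file

def chunk_document(source, text, max_words=50):
    """Split one document into fixed-size word windows, tagging each with its origin."""
    words = text.split()
    return [
        Chunk(" ".join(words[start:start + max_words]), source, n)
        for n, start in enumerate(range(0, len(words), max_words))
    ]

def format_with_citations(chunks):
    """Render retrieved chunks so each snippet carries a label pointing to its source."""
    return "\n".join(f"[{c.source}#{c.index}] {c.text}" for c in chunks)

# Made-up sample text and file name, for illustration only.
sample = "Either party may terminate this agreement with thirty days written notice."
chunks = chunk_document("contract.pdf", sample, max_words=5)
cited = format_with_citations(chunks)
print(cited)
```

Because every chunk carries its `source` and `index`, the labels can be passed to the LLM alongside the text, and the final answer can point back to the document it drew from. The same pattern would apply to support tickets, with a ticket ID in place of the file name.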

The Takeaway

Generative AI is the engine, but your data is the fuel. Working with vector databases has convinced me that the future of AI isn't just about smarter models; it's about connecting those models to the truth. If you want AI to be useful, you have to give it a memory.
